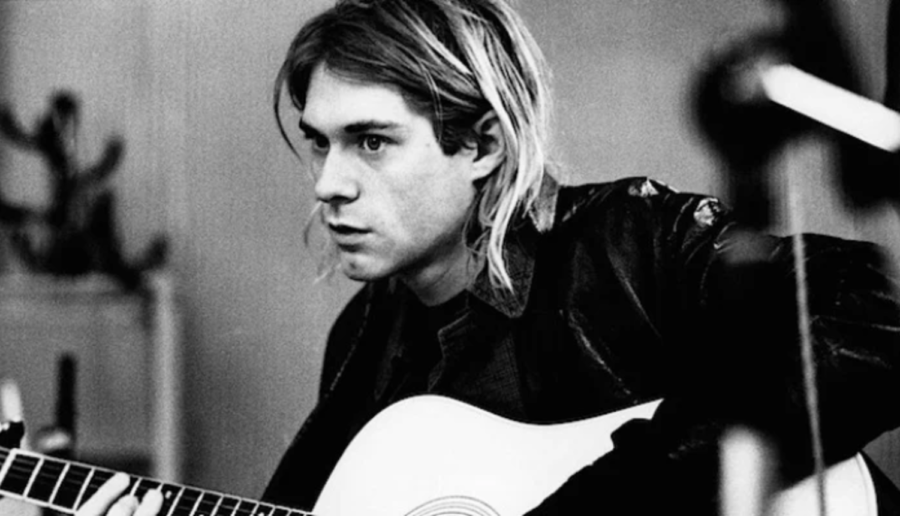

It’s 2050. You scored pit tickets for a Nirvana concert a few months ago. Kurt Cobain (yes, the iconic musician who died by suicide fifty-six years ago) is ten feet away, playing his brand-new song for the first time. Is this the future of music?

In January 2021, a mental health organization called Over the Bridge released a potentially revolutionary album titled Lost Tapes of the 27 Club. The album consists of four songs in the iconic styles of Kurt Cobain, Jim Morrison, Jimi Hendrix and Amy Winehouse — four distinguished and beloved musicians who all tragically died at the young age of 27.

Here’s the catch: almost everything about the album was generated by artificial intelligence (AI). Using Google’s Magenta AI along with a generic neural network, members of the organization attempted to, in their words, “create the album lost to music’s mental health crisis.”

The “27 Club” is the name often used in popular culture to describe the group of musicians who died at age 27, many of them from causes tied to substance abuse or mental health struggles. Kurt Cobain, Jim Morrison, Jimi Hendrix, Brian Jones and Janis Joplin are among the many who make up this unfortunate group. Through the album, Over the Bridge seeks to raise awareness of mental health in the music industry.

Ever since the deaths of these venerated musicians, dedicated fans have been left wondering what their music could have been. The work of Over the Bridge and AI opens up the question: what can their music still be?

The Lost Tapes project website outlines the three main steps of creating this music. First, the team fed Magenta isolated MIDI files of hooks, solos, rhythms and melodies from 20 to 30 songs by each artist. The AI algorithm then analyzed each artist’s style, musical preferences and rhythmic tendencies to generate a completely new string of hooks, solos, rhythms and melodies. Finally, the creative team selected the most interesting sections to record. A similar process was followed to generate lyrics.
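For readers curious about what "learn from old songs, then generate new ones" can look like in practice, here is a deliberately tiny sketch of those three steps. It is not Magenta's method or the project's code: it swaps in a simple first-order Markov chain, which only tracks which note tends to follow which, and the note numbers in `training_melodies` are made up for illustration rather than taken from any real song.

```python
import random
from collections import defaultdict

def learn_transitions(melodies):
    """Step 2a: record which pitch follows which across the training melodies."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return transitions

def generate(transitions, start, length, rng):
    """Step 2b: walk the learned transitions to produce a new pitch sequence."""
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:
            break  # dead end: no pitch ever followed this one in training
        melody.append(rng.choice(options))
    return melody

# Step 1: hypothetical training data (MIDI pitch numbers, invented for this sketch).
training_melodies = [
    [64, 64, 65, 67, 67, 65, 64, 62],
    [60, 62, 64, 65, 64, 62, 60, 62],
]

rng = random.Random(27)  # fixed seed so repeated runs give the same candidates
transitions = learn_transitions(training_melodies)

# Step 3: produce several candidates and leave the choice to a human listener.
candidates = [generate(transitions, 64, 8, rng) for _ in range(5)]
for candidate in candidates:
    print(candidate)
```

Every note in a generated candidate follows its predecessor the same way some note did in the training melodies, which is why the output can sound familiar without copying any one song outright. A real system models far more than single-note transitions, but the division of labor is the same: the software proposes, and people pick.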

The album is impressive. Each song undoubtedly resembles the artist it seeks to emulate. The “Nirvana” song “Drowned in the Sun” begins with a catchy guitar riff reminiscent of the famous single “Come as You Are” and builds to a more “Lithium”-style overall grunge feel. “The Roads are Alive,” in the style of The Doors, sounds like a song that didn’t quite make the cut on Morrison Hotel. Ray Manzarek’s signature Vox Continental organ sound permeates the instrumentals, the lyrics are just peculiar enough to be Morrison’s and a bluesy rock feel characterizes the song.

While the work of Google’s Magenta AI is nowhere near perfect, it is remarkable to hear brand new music that closely resembles the iconic sounds of a few of the most extraordinary musicians of all time.

There is, however, a question of legality that arises when considering the potential for AI to become a more extensive influence on the music industry. Right now, copyright law covers musical compositions, including lyrics and the arrangement of notes and rhythms. If work similar to the Lost Tapes project becomes more prevalent, it is uncertain whether replicating the sound of a particular artist or band using AI software will remain legal. A change in copyright law could eventually become necessary.

Another concern regarding the Lost Tapes project involves the role of human creativity in art. The arts have long been regarded as very human domains. If AI can create music that is equal to, if not better than, music created by living human minds, what is the future of the music industry? Further, what is the future of creativity?

Many people, especially the artistically inclined, listen to music with the knowledge that dedication and deep emotion are poured into the lyrics, melodies, rhythms and riffs. If a song originates in software algorithms rather than real emotional truths, can it still hold poetic and musical meaning? The answer is debatable. Some could argue that music should remain a human creation for this very reason.

Others listen to music simply because it’s enjoyable; they are perfectly content without delving into the musicality and emotional meaning of a given song. If an AI algorithm can create a tune that appeals to the general public based on trends, it may become a very effective way for organizations to make money in the music industry. In this case, what would the role of the artist become? To select the most interesting pieces created by a computer? It may sound extreme, but AI is certainly a powerful tool that money-seeking companies will have no problem using to create music that appeals to the general population of listeners.

There is certainly reason to pause and consider the potential effects of the Lost Tapes project and other AI endeavors on the future of the music industry. Still, there is comfort in knowing that although AI can create music based on past trends, it cannot yet invent truly new and innovative styles. That level of creativity, for now, remains a human skill.

Madeline Fabian can be reached at [email protected].