The start of the academic year brings with it a new onslaught of students taking standardized exams such as the SAT, ACT, GRE, LSAT, MCAT, GMAT and more. These exams nominally provide a way to measure students’ performance and their likelihood of succeeding in college or graduate school. A high score should identify a student who is likely to succeed, and a low score one who is likely to struggle. However, reality isn’t quite that simple.

GRE scores, for instance, serve only as a weak-to-moderate predictor of grades in graduate school. The College Board likes to promote the idea that high SAT scores predict a high college grade point average. However, it turns out that high school GPA predicts college success better than SAT scores do. This makes sense: the best indicator of how somebody will fare academically over the long term should be their past long-term academic performance.

The results of a standardized exam may represent the product of months of preparation, but ultimately, the numerical score still only represents an individual’s performance on a given day. Two equally prepared students could take the same exam and score differently solely due to random variation. Perhaps one of them is having an “off” day, or one of them is given a harder set of questions. If colleges absolutely need to boil a person’s academic worth down to a single number, it would make much more sense to boil it down to a number that has better power to predict success.

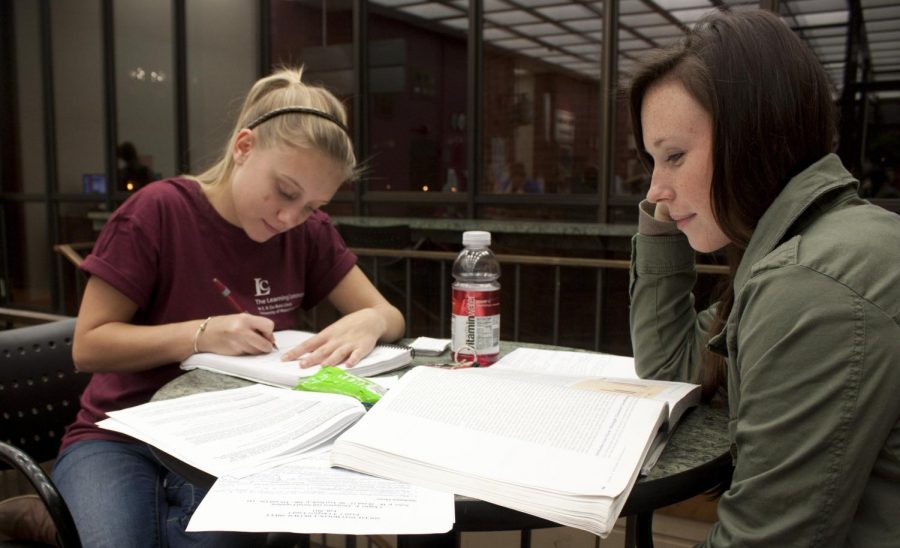

This isn’t to say that standardized test scores have no predictive power. In fact, they are good at predicting one thing: family income. Students from more affluent families score higher on standardized exams because they are more likely to spend hundreds to thousands of dollars on preparation materials, tutors and courses. They are also more able to take time off from working in order to focus on studying. Their scores do reflect hard work, but that work depends on resources that not everyone has equal access to.

Standardized tests aren’t even necessarily good at indicating subject matter knowledge. Otherwise qualified individuals who suffer from test anxiety will score worse than their peers who don’t. While it’s true that colleges require objective criteria for filtering down their applicant pool, relying on test scores only furthers the gap between rich and poor. The process also punishes qualified, knowledgeable applicants who are simply unable to perform well on standardized tests.

This is especially ironic given colleges’ lip service to inclusion and diversity. In 2015 the Medical College Admission Test nearly doubled its length by adding new sections for biochemistry, psychology and sociology. The test’s new content guidelines require students to understand the institutional factors that lead to social stratification. Yet its designers seem to miss that, by increasing the stakes for the exam, they simply widen the achievement gap between advantaged and disadvantaged groups. Similarly, colleges love to tout their diversity statistics but refuse to take measures that would actually increase diversity, such as making test scores an optional part of the application.

This isn’t to suggest that colleges should do away with the idea of numerical criteria altogether. For schools with large applicant pools, it’s important to have an objective number to filter down the pool before looking at the more subjective parts of a student’s application. In fact, too heavy a reliance on subjective criteria can lead to discrimination due to implicit biases. Harvard admissions officers, for example, rated Asian applicants lower on their subjective personality scores, leading to admission discrimination that is now the subject of a high-profile lawsuit. Colleges and universities definitely need to rely on some objective criterion, but testing companies have a vested interest in keeping these institutions dependent on their highly flawed product.

What should be done to fix this system? It’s hard to say for sure, or else someone would have already done it. One way to lessen the impact of a standardized test score would be for testing companies to report not only the final score but also a confidence interval: the range of scores the applicant could plausibly have earned on a different day or with a different set of questions. This would reduce the weight given to random variation on test day. Testing companies could also focus on making their exams shorter and more practical. The Princeton Review’s SAT prep website states that, rather than being a test of general intelligence or college success, the SAT really only measures how good someone is at taking the SAT. Reforming the exam questions to test concepts in a more straightforward, content-based manner, like the exams for Advanced Placement courses, would make standardized tests a better indicator of potential success.
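To make the confidence-interval idea concrete, here is a minimal sketch of how a reported score could be turned into a range. It assumes measurement error is roughly normal and summarized by a standard error of measurement. The function name `score_interval`, the 400 to 1600 scale and the error of 30 points are illustrative assumptions, not figures published by any testing company.

```python
def score_interval(score, sem, z=1.96, scale_min=400, scale_max=1600):
    """Return (low, high) bounds for a reported score.

    Assumes measurement error is roughly normal with standard deviation
    `sem` (the standard error of measurement). z=1.96 corresponds to an
    approximately 95% interval. All default values are hypothetical.
    """
    half_width = z * sem
    # Clamp to the ends of the score scale, since no score can fall outside it.
    low = max(scale_min, score - half_width)
    high = min(scale_max, score + half_width)
    return round(low), round(high)


# Two hypothetical applicants whose reported scores differ by 40 points.
a = score_interval(1300, sem=30)
b = score_interval(1340, sem=30)

# If the ranges overlap, the gap between the two scores could be noise.
overlap = a[1] >= b[0] and b[1] >= a[0]
print(a, b, overlap)
```

Under these made-up numbers the two ranges overlap, which is the point of the proposal: a 40-point gap that looks decisive as a pair of single numbers may fall within ordinary test-day variation.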

Admissions is a complicated game, and it’s doubtful that we’ll ever see standardized testing disappear completely. While more colleges are becoming test-optional for undergraduate admissions, standardized testing is still entrenched in post-graduate admissions. If admissions officers started putting more focus on students’ practical experience and long-term potential, university admissions could become a far more equitable way to pick the best and brightest students.

Edridge D’Souza is a Collegian columnist and can be reached at [email protected].

amy • Sep 25, 2018 at 10:18 am

Standardized tests are a way to assess the intelligence of applicants. I hate to break it to people: college is only for the intelligent. There are Walmart, factories, Uber and ordinary jobs for the average to below average.

When you dilute and diminish the standards to get in, that not only diminishes the value of education and college, it will also eventually diminish how employers perceive college graduates.

A stupid person is stupid; an average person is average; putting them through four years of college isn’t going to make them smarter or better or more qualified. You already see this with fewer and fewer employers requiring a college degree to get hired.

I think we need to create two college systems. One college system for liberals who want to include everyone regardless of merit, turn these colleges into social diversity incubators and have students be future socialists, without providing an education or preparing the student for a job or to do anything substantive with their life. I guarantee a college that was entirely run by liberals would plummet in rankings and be a laughing stock.

Then we should have another college that is merit-based, seeks intelligent students, doesn’t in any way brainwash students and actually provides an education (which is objective and based around knowledge). Within that college you can also have a very practical element for students who are less cerebral but still capable, like business school students.

I think this is fair; intelligent, capable students at UMass shouldn’t be dragged down, to be blunt about it, by the losers. We shouldn’t be dragged down by political administrators and professors who want to diminish standards, or by students who only got in because they marked some special box, not because they earned it.